AGI is our last invention.

And that is both scary and exciting at the same time.

The summer of 2017 was a weird one. It’s the summer I decided to quit my job at the company I’d built with my partner, following an acquisition. After the announcement, I was contractually on a 6-month garden leave, which gave me plenty of time to read, spend time with family and learn new things. But that’s not the sole reason I remember that summer. I was on a 5-day carefree (pre-kids) vacation on a Greek island when I stumbled upon a 2-part blog post by Tim Urban called “The AI Revolution: The Road to Superintelligence”.

I’m a super slow reader, so it took me a couple of days to read this 75-page piece, which explained in human language, to a non-engineer, what is going on with AI and what the future might look like within our lifetimes.

For the next couple of weeks, I was still digesting what I had just read, trying to discuss it with friends (no one was really interested in these discussions) and wondering what the f*ck was going on with the world and why no one was talking about this, except a popular science blog I had accidentally stumbled upon and a few others in the wider, but back then tiny, AI community. This read literally gave me a few sleepless nights.

So because nobody cared, and because I had plenty of time and no idea what my next step would be career-wise, I decided to make people care. I turned a summary of this article into a 40-minute keynote presentation and had the chance to discuss the good and the bad of AI progress at corporate events I was invited to and at several industry fora. Within the next 2 years, I delivered a version of that keynote more than 30 times. Here’s a video (unfortunately, the only one that is online is in Greek):

By discussing AI in this context, I realised it wasn’t that people didn’t care; it was more that they couldn’t really grasp how exponentially different the future might look, because most of us are wired to think about the future in a linear way. I guess it would be the same as discussing the impact of smartphones on our daily lives in 2005. No one would give a damn.

The key points of this read kept puzzling me and kept me thinking for the years to come. The gist:

Looking at the progress of AI, there are eventually only two futures: extinction of human civilization or immortality. It all depends on the goals of the piece of software that first reaches AGI (Artificial General Intelligence, or human-level AI), the so-called “Alignment” of goals. Based on experts’ predictions, not mine, this is going to happen within our lifetimes. The outcome will be permanent and extreme.

By the way, for those who want to go deep down the rabbit hole of “Alignment”, there’s this great book by Brian Christian, “The Alignment Problem”.

In the past couple of years, I kind of parked these thoughts at the back of my mind, if there’s such a place, with the belief that these developments would get real later rather than sooner. It wasn’t just my opinion; the median prediction for reaching AGI, combining the optimists and sceptics of the AI community, was around 2040, and according to the techno-optimist Ray Kurzweil (the guy who accurately predicted previous major platform shifts like the internet and smartphones), that date would be closer to 2029.

Like everyone else, I was partly in denial and partly unable to really grasp it, so I kind of skipped the “how fast is this going to happen” anxiety.

Enter OpenAI and ChatGPT.

OpenAI released ChatGPT, built on GPT-3.5, on November 30, 2022. It was the first Large Language Model product that caught the attention of the world. It’s been less than 5 months since its release. It feels like years, and it seems that the world has changed forever. What is more impressive than the technology and its use cases is the acceleration of progress since its release.

Look at this timeline from “There’s an AI for that”, an aggregator documenting new tools and capabilities that are currently in development. In March alone, we added 194 new AI capabilities, versus 64 in the entirety of 2022. Do you see how the pace of change is ramping up?

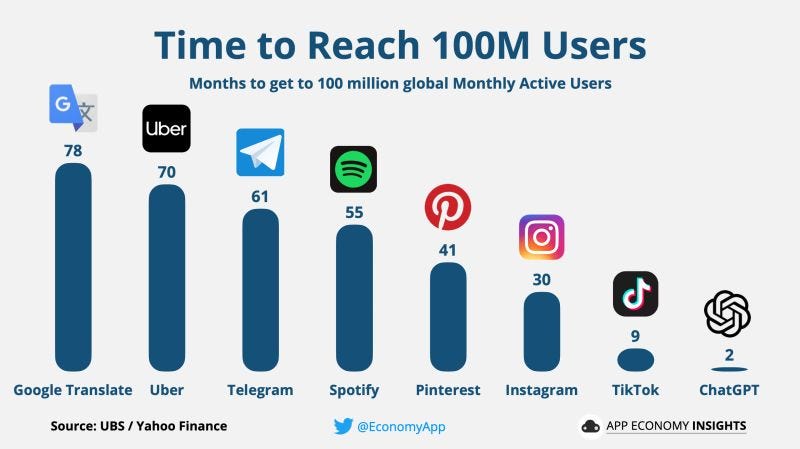

And it’s not just that we’ve been increasing the supply of available software; demand is at unprecedented levels. ChatGPT was not so much a scientific breakthrough (the transformer architecture dates back to a 2017 paper) as a product breakthrough: OpenAI produced a user-friendly way to interact with an LLM, a simple chat interface. It took them 2 months to reach 100M users.

For all the above reasons, any attempt to predict the short- or long-term impact of AI is probably wrong, given the pace of change. We are not wired to predict an exponential future, and we don’t have enough input to do so. This is a good graph Tim Urban uses to make this argument:

So, having said that, I’d like to share my flawed and imperfect thoughts about the future, given the recent developments.

Alignment and Concerns for Our Long-term Future

Before that, a disclaimer: I’m not fond of any maximalist view on the matter. There is a super optimistic school of thought that is mostly excited about the future. Kurzweil is a good representative of that school, and you can listen to this podcast to get the gist:

Here’s a short GPT summary of his book “The Singularity is Near”:

And then there’s the exact opposite school of sceptics, like Eliezer Yudkowsky. I’d like to spend a bit more time on this camp because I think it’s underrepresented in the public discussion and because, while I’m in the middle, I tend to lean a bit more towards this thinking.

What these guys are saying is that the most important discussion right now is the one about “Alignment”. In human language, alignment is the unsolved challenge of how to create AGI software that is 100% aligned with human goals, making sure that AI will never turn against its creators.

Any other discussion right now is unimportant or at least less important. Even a 1% risk of misalignment is too big for humanity to handle. Any discussion about climate, politics, inequality, peace, human rights, or scientific progress [insert any burning platform of the current public discussion] is less important than this. Let me explain why:

If (or rather, when) we create an intellect that is more intelligent than we are and is aligned with human goals, there will be a solution for most or all of these challenges.

If (or rather, when) we create an intellect that is more intelligent than we are and is not aligned with human goals, none of the current challenges will matter in the face of the extinction of our species.

Notice that this is not a question of if but a question of when. And most scientists agree that this is going to happen in our lifetimes. A few paragraphs ago, I mentioned that the 2015 median prediction of AI researchers for reaching AGI was 2040. A new survey that ran after the ChatGPT rollout moved that date closer to 2029 (closer to Kurzweil’s prediction). Factoring in how bad humans are at predicting the future, I would bet that AGI is a lot closer than 2029. Here’s a comprehensive view of these surveys. There are multiple published, with different questions and varying results. One thing is sure: most agree that this is happening before 2060, and smart people like Elon Musk and others say they would be surprised if we don’t reach AGI before 2029.

If this is not scary, let me make it a bit scarier for you.

1. We lack a common definition of Artificial General Intelligence (AGI). Our old-school definition of AGI was the Turing Test, but here’s the thing: any LLM that is now publicly available is passing the Turing test. So we need to find a new definition of AGI, which we currently don’t have. Here’s the CEO of OpenAI, Sam Altman, discussing with Lex Fridman whether GPT-4 is sentient or not. Neither of them has a definite answer, and one of them is basically the creator of this program 😱. Here’s the excerpt from the discussion:

2. AGI is just a line we humans have drawn, and it has zero importance for a superior intellect. Here’s why: a piece of software that has the ability to autonomously learn new things and edit its own code has no reason to stop when it reaches AGI (whatever that means, as we don’t have an exact definition). It will be like a train that passes right by us without stopping.

3. Last but not least, we don’t need to reach AGI to face the threat of extinction. We tend to perceive AGI as something that mirrors human consciousness, but a system that is more intelligent than humans and a potential threat to them doesn’t necessarily need to think like a human, as in having feelings, loving, getting angry and all the things that make us who we are. It doesn’t even need to be that smart.

For all the above reasons, the most notable representatives of this camp published an open letter to the AI community, asking the biggest research labs to pause the training of AI systems more powerful than GPT-4 for at least 6 months. More than 10,000 people have signed this letter, among them Steve Wozniak, Elon Musk, Yuval Noah Harari and others.

I stand with them on this: not with the proposal itself, which is unrealistic and rather symbolic, but with its essence, which acknowledges how big the risk is to our species.

I stand with them, weighing in 3 additional parameters.

How slow society and institutions are to acknowledge and discuss big challenges like this one or similar ones (e.g. climate).

How slow and inefficient governments are at keeping up with regulation for these systems.

How exponential the pace of change in this field is.

To give you a recent example of this, it’s been less than 2 months since GPT-4 went live, and it seems like old news already. Say hello to Auto-GPT and BabyAGI.

These are AI agents that have (1) memory (ChatGPT doesn’t remember what you wrote in another chat), (2) access to the internet through Google, (3) the ability to execute tasks like writing code for you, and (4) a connection to ElevenLabs for text-to-speech. They are 3 weeks old at the time I write this, and they have taken the world by storm.

While at the time of writing these agents are dumb, there’s no doubt that they will get much better sooner rather than later. To grasp the importance of this development, here’s a study published by AI researchers at Stanford.

Long story short, they threw 25 generative agents into a Sims-like simulation game, and without any pre-programmed instructions, the agents formed a society with human behaviours, like an agent organising a party and not inviting everyone to it, or agents arranging dates for the party 😱.

It’s like we have opened Pandora’s box, and there’s no way back.

An aligned future can be an exciting one.

While all the above are true, this is just one way to look at AI. There are countless reasons to be excited about the future. Reaching AGI is not just going to help us solve the biggest challenges of today with breakthroughs in health, scientific discovery, space exploration, advanced automation and even radical life extension; it will also give us answers to questions that we don’t yet have the intelligence to ask.

Let’s take the example of an intellect that is less intelligent than we are, monkeys:

We tend to think about higher intelligence in terms of speed, but what about quality? It’s not just that a monkey doesn’t understand how to construct a building. It’s that a monkey will never wonder how a building came to be. Now let’s go back to our case. An AGI will not just answer how the universe came to be; it will give us answers to questions we haven’t yet asked.

And THAT is exciting in so many ways.

With that in mind, it’s really hard to set limits on how positive the future might look if we solve the alignment problem. Let me give you three examples:

Radical Life Extension.

I don’t dare to say the “Road to immortality”, but this thinking leads to that. We humans are built of hardware (flesh, bones, water, blood, brain etc.) and software, which is basically our memories and our feelings. We cease to exist because of a hardware failure, but there’s no theory that says that this has to happen. So what if we replaced our imperfect hardware with hardware that doesn’t malfunction, or we had the ability to replace a shitty piece of hardware with a new one that is less shitty? Enter immortality, or at least “radical life extension”. Based on that thinking, ageing is a disease we can potentially cure.

Energy Abundance.

In their 2012 book “Abundance: The Future Is Better Than You Think”, Peter Diamandis and Steven Kotler discuss a radically different, sustainable future of energy abundance. The book highlights the potential of solar energy as a scalable and sustainable source capable of providing abundant clean energy for the planet. They also discuss the progress in solar technology and how it is becoming increasingly cost-effective. At the same time, they challenge the notion of resource scarcity by illustrating how technology can help unlock new resources, such as extracting valuable minerals from seawater or utilizing advanced recycling techniques to minimize waste.

Advanced Automation.

AI-driven automation in various sectors, such as manufacturing, agriculture, and transportation, could lead to increased efficiency, reduced costs, and minimized environmental impact. But if we extend that thinking for a bit, we should start re-thinking the structure of our society and economic systems without “the concept of work”. That is both scary and exciting at the same time.

The Short term impact of AI

I realise that I have spent too much time in this article discussing the long-term future; the challenges and opportunities of the short-term future are radically different. It’s natural that the current discussion starts from ourselves, and the most common concern is “Will robots take my job?”

Will Robots take my job?

The short answer, if you are a white-collar worker, and contrary to what representatives of the biggest actors in AI are saying (Google, OpenAI, Microsoft et al.), is probably yes, although it is a complex answer.

Moving forward, to produce the same output, we are going to need fewer engineers, designers, researchers, analysts, project managers, real estate agents, investment bankers, lawyers [insert any similar job function].

That is to produce the same output. But we’ve never stood still as humans, which means that our output is constantly increasing; as such, the answer to this simple question is more complex than we think. The progress in AI will probably result in some professions getting wiped out within a few years, new jobs being created (a good example is the rising demand for prompt engineers) and unprecedented economic growth.

To give you an example from the country I come from, Greece: its tiny but rapidly growing startup ecosystem is going to need 150,000 more engineers in the next 5 years. We are obviously not going to produce 150,000 engineers in five years, but looking at how current AI tools can multiply an engineer’s output, we might need fewer of them, yet still significantly more than we have now. Again, simple question; complex answer.

Yuval Noah Harari is rather a sceptic in this discussion. In his book “21 Lessons for the 21st Century”, he looks back at the history of humankind and how technological revolutions transformed work, turning us from hunter-gatherers to farmers, to factory workers, to cashiers in supermarkets. He claims that it’s really hard to imagine what the next such evolution would look like, and he predicts millions of people without a job in the next few decades. He goes a step further and calls them “the useless class”. With that charged term, he attempts to describe a big part of the population that will not manage to retrain for a job market whose shape no one can currently predict, and that will end up out of a job.

This is why there’s an ongoing discussion about a form of Universal Basic Income (UBI) that no one has yet cracked. This is also why many stress the importance of gaming as part of our lives: it’s one thing to solve the challenge of income, but there’s another challenge of how we are going to spend our time in the absence of work (30%+ of our life).

Replacing part of our work time with gaming is a very dystopian version of the future, which I wouldn’t like to be part of. This dystopia is very well described in the movie “Ready Player One”, a must-watch for those of you who haven’t had the chance to watch it already.

The End or the Acceleration of Fake News?

But the job market is only one of the major discussions everyone is having right now. The other one is about misinformation and how these tools will arm troll farms with advanced misinformation capabilities in the near future. It’s really scary to think that, at minimal cost, bad actors who control hundreds of thousands of bots can use Large Language Models (LLMs) combined with deepfake video and text-to-speech technology to influence the public discussion. And that is probably one of the things that we’ll see in the short-term future, if we aren’t seeing it already.

But there’s another completely opposite angle to misinformation as these AI agents become a more integral part of our daily lives. It’s not hard to see an AI Assistant working as your primary news source, and if that turns out to be true, then truly personalised news coming from your Assistant may indeed signal the end of misinformation. My opinion is that things will get worse before they get better.

AI is going to have an impact on every aspect of our lives: entertainment, education, geopolitics, defence, energy and more. I will try to cover some of these in my next articles. It is overwhelming to document the change happening on all these fronts; it is both scary and exciting at the same time.

It feels like we are living in an era that people in the future will look back on as a defining moment for human civilisation. What remains to be seen is how our generation is going to be remembered.